Intro – Do Unto what the Sister-in-law commands

My sister-in-law runs a dog training business in Queensland. Part of her business is keeping records for her canine clients, especially notes, vaccinations and their due dates, medical records and certificates.

In a previous job she used Animal Shelter Manager (ASM3). She’s familiar enough with its features and interface to know it will cover her needs.

Her business is not big enough to justify the price of the SaaS version of ASM3, so as the tech-savvy (debatable) one in the family, it fell to me to get it up and running for her.

This led to several nights of struggling, annoyance and failure.

Fitting the pieces together

To start off, I wanted to get a demo version running so I could see what I would need to do to deploy it for her.

I checked out the ASM3 GitHub repository and yes! There is a Dockerfile and a docker-compose.yml.

But no: it is six years old and didn’t work when I tried to build it. I tried a couple of other miscellaneous sites offering hope, but to no avail.

In the end, after many Google searches, I stumbled across https://lesbianunix.dev/about with the following guide:

Apart from a domain name that was sure to set off all the “appropriate content” filters at work, I could get it to work with a few modifications. The instructions from the author, Ræn, are substantially different to the old Dockerfile and the instructions on the ASM3 home page.

Let’s build it

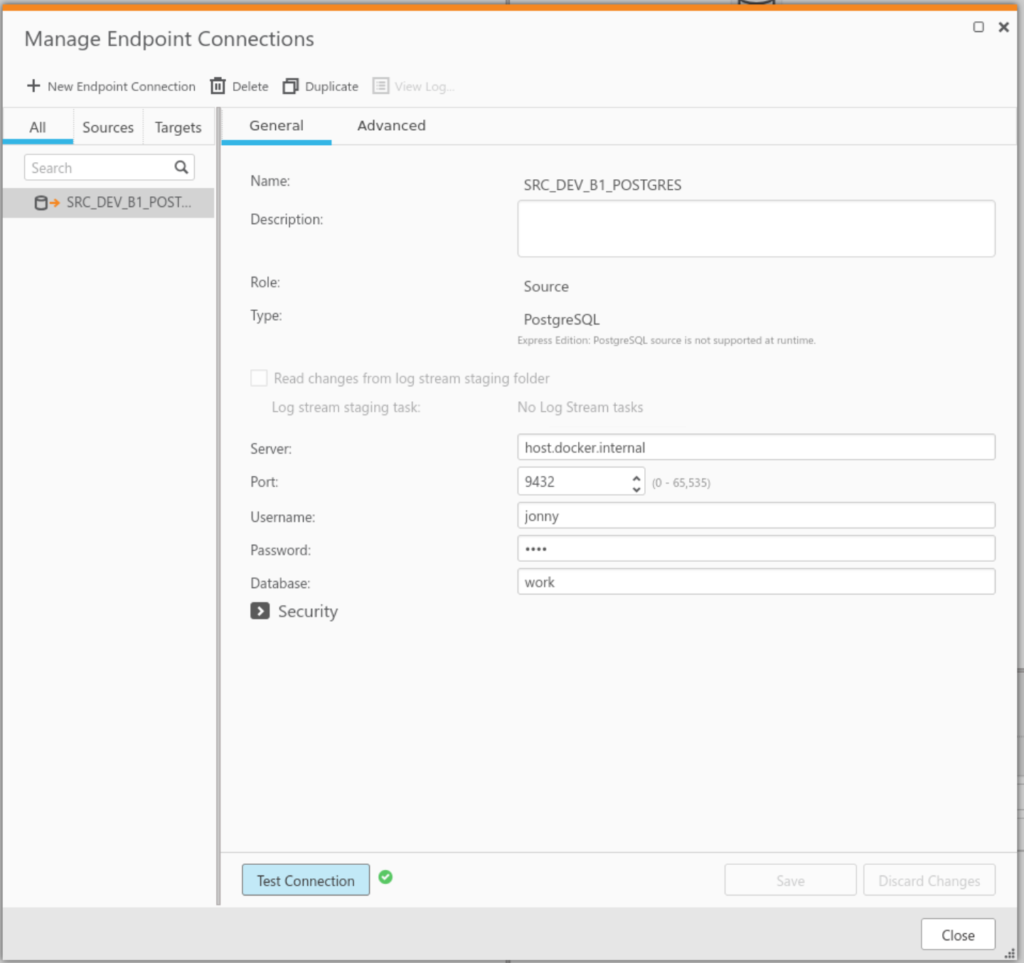

With my base working version, I cobbled together some Dockerfiles for ASM3 and Postgres and a Docker Compose file to tie them together:

https://github.com/jon-donker/asm3_docker

(Note that this is not a production version; I will have to obscure passwords etc. in the final version.)

The containers build just fine and fire up with no problem.

Visiting the website

http://localhost/

ASM3 redirects and builds the database, but then goes to a login page. I enter the username and password, but it loops back to the login page.

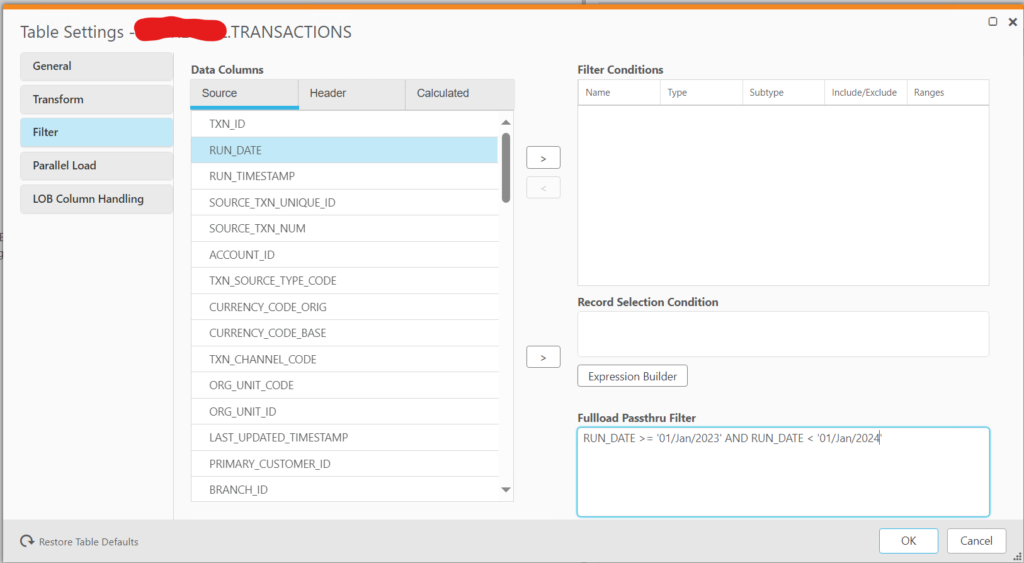

I think the problem lies with the base_url and service_url settings in asm3.conf, or possibly with the http-asm3.conf settings.
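One quick way to test that theory is to check whether base_url and service_url point at the same scheme, host and port as the address I am actually browsing. The sketch below is only that, a sketch: it assumes asm3.conf uses plain `key = value` lines with `#` comments, and the helper names (`parse_conf`, `origin`, `check_urls`) and the sample values are my own inventions for illustration, not anything from ASM3. A mismatch would not prove it is the cause of the login loop, but it would be worth ruling out.

```python
from urllib.parse import urlsplit

# Default ports, so that http://localhost and http://localhost:80 compare equal.
DEFAULT_PORTS = {"http": 80, "https": 443}


def parse_conf(text):
    """Parse simple 'key = value' lines, skipping blanks and # comments.

    Assumption: asm3.conf follows this layout.
    """
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


def origin(url):
    """Return (scheme, host, port) for a URL, filling in the default port."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)


def check_urls(settings, browsed_url):
    """List the URL settings whose origin differs from the browsed address."""
    problems = []
    for key in ("base_url", "service_url"):
        value = settings.get(key)
        if value is None:
            problems.append(f"{key} is not set")
        elif origin(value) != origin(browsed_url):
            problems.append(f"{key} = {value} does not match {browsed_url}")
    return problems


if __name__ == "__main__":
    # Hypothetical values: the config points at port 5000
    # while the browser is visiting port 80.
    sample = """
    # sample settings, not copied from a real install
    base_url = http://localhost:5000
    service_url = http://localhost:5000/service
    """
    for problem in check_urls(parse_conf(sample), "http://localhost/"):
        print(problem)
```

With the hypothetical values above, both settings get flagged because the config says port 5000 while the browser is on port 80. If the real file comes back clean, the next suspect would be the http-asm3.conf side.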

Anyway, I logged an issue with ASM3 to see if it is something simple that I missed, or whether I have to start pulling apart the source code to find out what it is trying to do.

I’ll update this post when I find something.